SAM 3D Objects is a 3D reconstruction model. Given an image, you select an object within it, and the model generates a 3D model of that object that you can view and edit in 3D software.
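Because the model reconstructs an object you select, the selection has to reach the model in some machine-readable form. Assuming it is passed as a binary mask over the image (in practice you would usually get a precise mask from a segmentation model such as SAM 3 rather than a rectangle), the minimal sketch below turns a rectangular selection into such a mask. `box_to_mask` and `mask_area` are hypothetical helpers for illustration, not part of the SAM 3D Objects code.

```python
from typing import List, Tuple

# Hypothetical representation: a row-major grid of 0/1 values, one per pixel.
Mask = List[List[int]]


def box_to_mask(width: int, height: int, box: Tuple[int, int, int, int]) -> Mask:
    """Return a height x width binary mask with 1s inside box = (x0, y0, x1, y1).

    x1 and y1 are exclusive. The box is clipped to the image bounds, and an
    inverted box (x1 <= x0 or y1 <= y0) simply selects nothing.
    """
    x0, y0, x1, y1 = box
    x0, x1 = max(0, x0), min(width, x1)
    y0, y1 = max(0, y0), min(height, y1)
    return [
        [1 if (x0 <= x < x1 and y0 <= y < y1) else 0 for x in range(width)]
        for y in range(height)
    ]


def mask_area(mask: Mask) -> int:
    """Count selected pixels, a quick sanity check before running reconstruction."""
    return sum(sum(row) for row in mask)


if __name__ == "__main__":
    mask = box_to_mask(6, 4, (1, 1, 4, 3))
    for row in mask:
        print("".join(str(v) for v in row))
    print("selected pixels:", mask_area(mask))
```

Clipping to the image bounds keeps a sloppy click-and-drag selection from raising an error; an empty mask (area 0) is an easy signal that nothing was selected.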

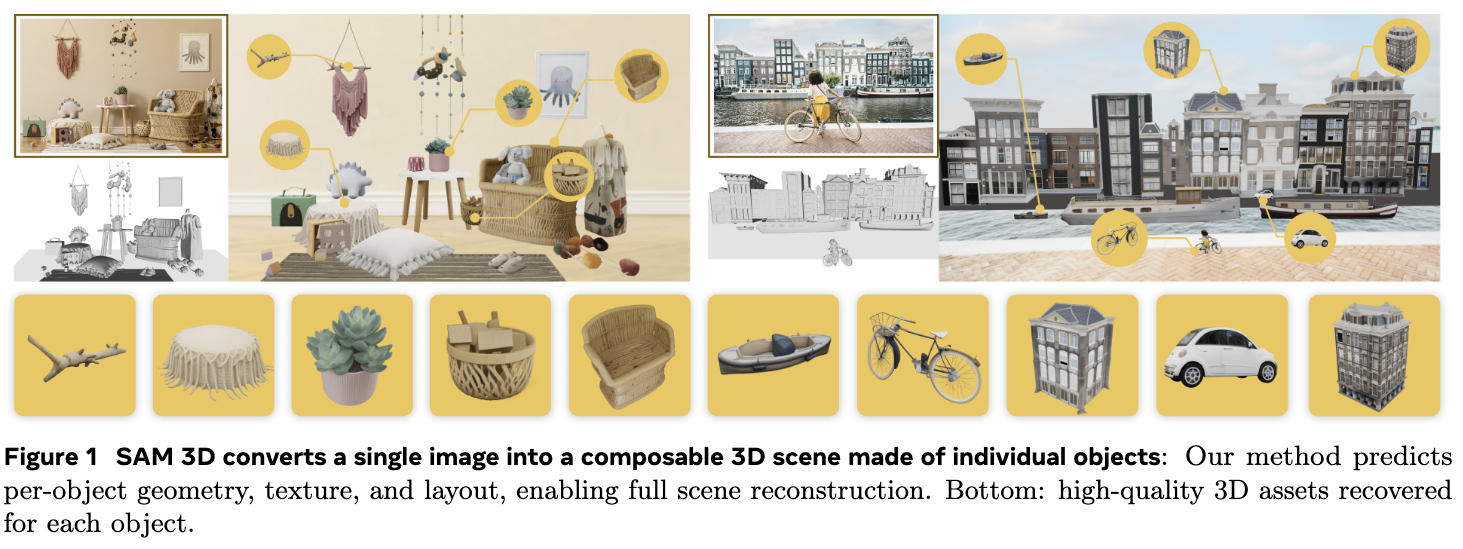

For example, you can use SAM 3D Objects to reconstruct a building, an everyday object (e.g. a coffee cup or a plant), a vehicle, and more. The image above shows examples of 3D objects generated from input images.

SAM 3D Objects was developed with a custom data engine in which human reviewers verified the quality of generated meshes and 3D artists assisted when required. According to Meta, the data engine built by the research team working on SAM 3 was used to "annotate physical world images with 3D object shape, texture, and layout at unprecedented scale for 3D, annotating almost 1 million distinct images and generating approximately 3.14 million model-in-the-loop meshes."

The SAM 3D Objects source code is available on GitHub under a custom license. The repository includes the code you need to load an image, run the model on it, and return the generated 3D representations of the selected objects.
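To use a reconstructed object in other software, you typically export it to a standard interchange format. The sketch below assumes the mesh is available as plain lists of vertices and triangle faces (a simplified, hypothetical representation; the repository's actual output objects and export helpers may differ) and serializes it as Wavefront OBJ, a text format most 3D tools can import. `mesh_to_obj` is a hypothetical helper, not part of the SAM 3D Objects code.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


def mesh_to_obj(vertices: Sequence[Vertex], faces: Sequence[Face]) -> str:
    """Serialize a triangle mesh to Wavefront OBJ text.

    `faces` use 0-based vertex indices (the usual in-memory convention);
    OBJ face records are 1-based, so each index is shifted by one.
    """
    lines: List[str] = []
    for x, y, z in vertices:
        lines.append(f"v {x} {y} {z}")
    for a, b, c in faces:
        for i in (a, b, c):
            if not 0 <= i < len(vertices):
                raise IndexError(f"face index {i} out of range")
        lines.append(f"f {a + 1} {b + 1} {c + 1}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # A single tetrahedron standing in for a reconstructed object.
    verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    tris = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
    # Redirect stdout to object.obj to open the result in a 3D tool.
    print(mesh_to_obj(verts, tris), end="")
```

OBJ is used here only because it is plain text and easy to verify by eye; richer formats that also carry texture and materials may suit real reconstructions better.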

SAM 3D Objects is part of the SAM 3 family of models, which includes SAM 3, the flagship model for zero-shot image segmentation; SAM 3D Objects, for object reconstruction; and SAM 3D Body, for human mesh reconstruction.

SAM 3D Objects is licensed under a custom license.

You can use Roboflow Inference to deploy a SAM 3D Objects API on your own hardware. Roboflow Inference runs on CPU devices (e.g. Raspberry Pi, AI PCs) and GPU devices (e.g. NVIDIA Jetson, NVIDIA T4).
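Once a model API is running, client code sends it an image and an object selection over HTTP. The sketch below shows that pattern using only the Python standard library. It assumes a local server at `http://localhost:9001`, and the route `/sam3d/reconstruct` and the payload field names are hypothetical placeholders chosen for illustration, not a documented Roboflow Inference schema; check the deployment instructions for the real route and request format.

```python
import base64
import json
import urllib.request
from typing import Any, Dict, List

# Hypothetical values for illustration only.
SERVER_URL = "http://localhost:9001"
ROUTE = "/sam3d/reconstruct"  # hypothetical route name


def build_payload(image_bytes: bytes, box: List[int]) -> Dict[str, Any]:
    """Package an image and an object selection as a JSON-serializable dict.

    The field names are hypothetical placeholders, not a documented schema.
    """
    return {
        "image": {"type": "base64", "value": base64.b64encode(image_bytes).decode("ascii")},
        "box": box,
    }


def post_json(url: str, payload: Dict[str, Any], timeout: float = 120.0) -> Dict[str, Any]:
    """POST a JSON payload and decode the JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With a server running, a call would look like `post_json(SERVER_URL + ROUTE, build_payload(image_bytes, [120, 80, 360, 300]))`. The generous default timeout reflects that 3D reconstruction can take much longer than a typical detection request.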

Below are instructions on how to deploy your own model API.