On August 7th, 2025, OpenAI released GPT-5, the newest model in their GPT series. GPT-5 has advanced reasoning capabilities and, like many recent OpenAI models, multimodal support. This means you can prompt GPT-5 with one or more images and ask for an answer, and you can also ask the model to spend more time reasoning before it answers.
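
To make the two capabilities above concrete, here is a minimal sketch of sending an image and a question to GPT-5 while requesting more reasoning effort. It assumes the official `openai` Python package and its Responses API, an `OPENAI_API_KEY` environment variable, and the model ID `gpt-5`. The helper names `build_image_prompt` and `ask_gpt5` are hypothetical, chosen only for this example.

```python
import base64
from pathlib import Path


def build_image_prompt(image_bytes: bytes, question: str,
                       mime_type: str = "image/jpeg") -> list:
    """Build a Responses API `input` list: one text part plus one inline image."""
    # Encode the raw image bytes as a base64 data URL so no file hosting is needed.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "input_text", "text": question},
                {
                    "type": "input_image",
                    "image_url": f"data:{mime_type};base64,{encoded}",
                },
            ],
        }
    ]


def ask_gpt5(image_path: str, question: str, effort: str = "high") -> str:
    """Send an image and a question to GPT-5 and return the text of the answer."""
    # Imported lazily so build_image_prompt works even without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.responses.create(
        model="gpt-5",  # assumed model ID
        reasoning={"effort": effort},  # ask for more (or less) reasoning
        input=build_image_prompt(Path(image_path).read_bytes(), question),
    )
    return response.output_text
```

With a local file, a call might look like `ask_gpt5("image.jpg", "How many people are in this image?")`, where `image.jpg` is a placeholder path. Lowering `effort` trades reasoning depth for speed and cost.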

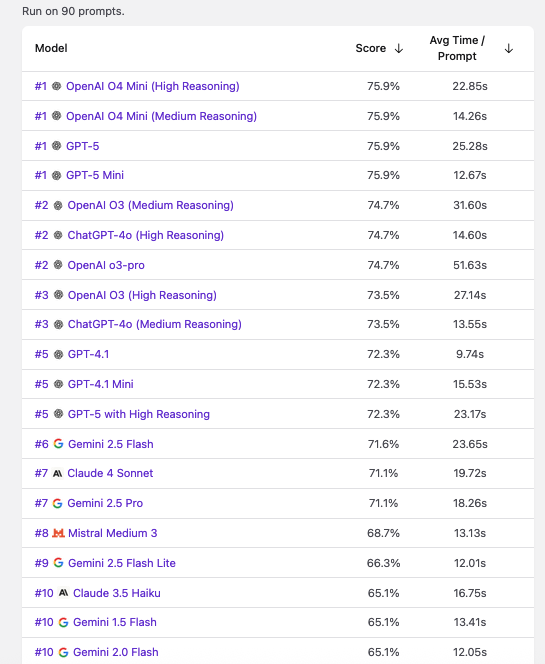

This combination of reasoning and visual capabilities has been present in several of OpenAI's “o” series models. Reasoning models consistently score high on Vision Checkup, the open-source qualitative evaluation tool for vision models. Indeed, the top four models on the leaderboard are all reasoning models.

In our tests, GPT-5 tied for first place on Vision Checkup with OpenAI's o4-mini.

You can use Roboflow Inference to deploy a GPT-5 API on your hardware. You can deploy the model on CPU devices (e.g. Raspberry Pi, AI PCs) and GPU devices (e.g. NVIDIA Jetson, NVIDIA T4).

Below are instructions on how to deploy your own model API.